Artificial intelligence models may soon fall into a doom spiral as machine-generated gibberish floods the internet.

It is no secret that generative AI must train on large swathes of data to generate an output.

However, that data must be “high-quality,” meaning accurate and reliable – and the tech giants know it, too.

ChatGPT developer OpenAI has partnered with news publishers like Vox Media and News Corp to train its chatbots on fresh content.

But this may not be enough to slow the spread of synthetic data, which has flooded the internet since AI systems became widely accessible.

As companies like Google and Meta comb search engines and social media for training data, it is inevitable that they will encounter AI-generated content.

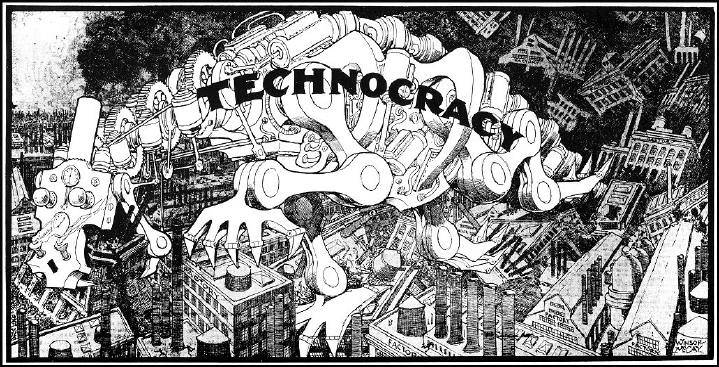

When this information is compiled into a dataset for an AI model, the result is the equivalent of inbreeding.

Systems become increasingly deformed as they learn from inaccurate, machine-generated content and spit out falsehoods.

This information then winds up in a dataset for a different model, and the process repeats, leading to a total meltdown.

Researcher Jathan Sadowski has been documenting the phenomenon on X for over a year.

He coined the term “Habsburg AI” in February 2023, taking the name from a notoriously inbred royal dynasty.

Sadowski defines it as “a system that is so heavily trained on the outputs of other generative AIs that it becomes an inbred mutant.”

The phenomenon goes by many names. Other researchers know it as model autophagy disorder, or MAD.

The term “autophagy” comes from the Greek for “self-devouring,” aptly capturing the way a system trains itself on AI-synthesized content like a snake eating its own tail.

Researchers at Rice and Stanford universities were among the first to discover that models decline in the quality and diversity of their output without a constant stream of quality data.

Complete autophagy occurs when a model is trained solely on its own responses, but machines can also train on data published by other AI programs.
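The self-consuming loop can be illustrated with a toy simulation. The sketch below is not the researchers' method and involves no real AI model: it assumes a "model" is nothing more than a bell curve fitted to a handful of numbers, and the function name `simulate_collapse` and its parameters are hypothetical, chosen only for illustration. Each generation learns solely from samples produced by the previous one, and the fitted spread stands in for the diversity of the output.

```python
import random
import statistics


def simulate_collapse(n_samples=10, generations=200, seed=0):
    """Repeatedly fit a bell curve to data sampled from the previous fit.

    Returns the fitted standard deviation (a stand-in for output
    diversity) at generation 0 and after each later generation.
    """
    rng = random.Random(seed)

    # Generation 0 learns from "real" data: draws from a standard normal.
    data = [rng.gauss(0.0, 1.0) for _ in range(n_samples)]
    mean, std = statistics.fmean(data), statistics.pstdev(data)
    history = [std]

    for _ in range(generations):
        # Each later generation sees only samples from the previous model.
        data = [rng.gauss(mean, std) for _ in range(n_samples)]
        mean, std = statistics.fmean(data), statistics.pstdev(data)
        history.append(std)

    return history


if __name__ == "__main__":
    history = simulate_collapse()
    print(f"spread at generation 0:   {history[0]:.3g}")
    print(f"spread at generation {len(history) - 1}: {history[-1]:.3g}")
```

In this simplified setting the spread tends to shrink toward zero over many generations, because each refit loses a little of the variation in the data it was given and nothing fresh comes in to restore it. Real language models are far more complex, so this is only an analogy for the loss of diversity described above.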

“Training large-language models on data created by other models…causes ‘irreversible defects in the resulting models,’” Sadowski tweeted, referencing an article in the journal Nature.

Digital inbreeding harkens back to the idea of “model collapse,” where systems grow increasingly incoherent due to an influx of AI-generated content.

While the idea was once just theory, experts believe it is becoming increasingly likely as more and more synthetic data appears.

NewsGuard, a platform that rates the credibility of news sites, has been tracking the increase of “AI-enabled misinformation” online.

By the end of 2023, the group had identified 614 unreliable AI-generated news and information websites, dubbed “UAINS.” That number has since swelled to 1,036.

The websites span over a dozen languages and have generic names like “Ireland Top News” and “iBusiness Day” that resemble those of legitimate outlets.

Chatbots and other generative AI models may train on this information, regurgitating falsehoods about news events, celebrity deaths, and more in their responses.

While some netizens couldn’t care less about the future of AI, the phenomenon, if unchecked, could have disastrous impacts on human users.

As media literacy declines and AI-generated content floods the internet, users may struggle to distinguish between factual information and machine-generated nonsense.

Researchers are acutely aware of the risk, but it is unclear just how far AI developers are willing to go to prevent “inbreeding.”

After all, synthetic data is freely available and much cheaper to source. Some proponents argue it doesn’t fall victim to the same moral and ethical dilemmas as human-generated content.

“So weird that everybody else has to change what they’re doing to support the spread and integration of AI into our lives – but apparently the AI systems and tech start-ups don’t need to change at all,” Sadowski quipped in one post.

“They are perfect. We are the problems.”